An ASU researcher is adding to the conversation about the roles of drones and robots in our lives through a system he created that allows users to control a swarm of robots with their brains.

"The project is about controlling interfaces between humans and robots," Director of the Human-Oriented Robotics and Control Lab, Panos Artemiadis, said. "There has been a lot of research about how humans can control a single robot. What we started working on is how we cause the brain to control multiple robots."

The system works in multiple stages.

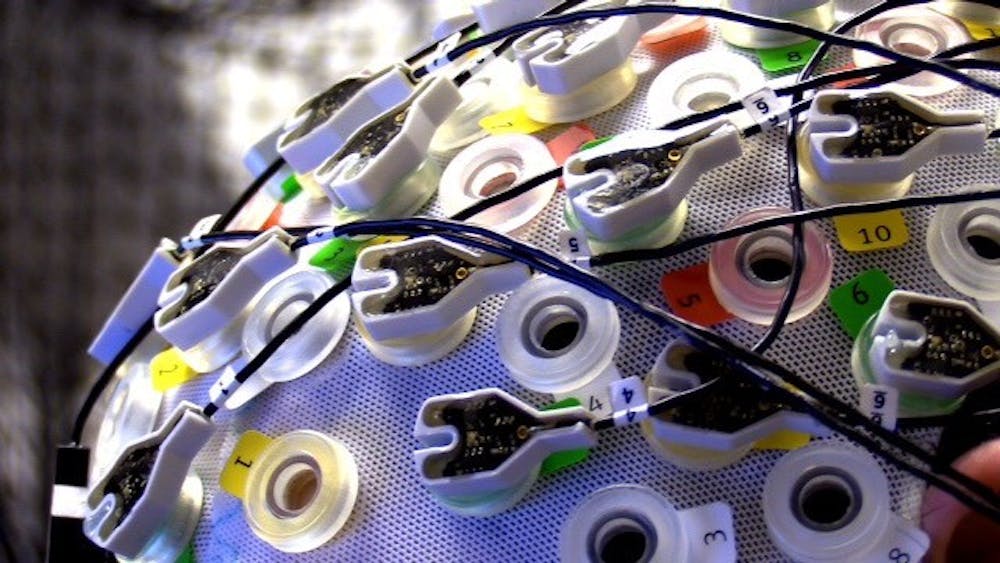

On one end, users wear a device on their heads with a series of 128 electrodes. The electrodes pick up surface brain signals and send that numerically encoded information through a series of algorithms.

The algorithms then decode that information and make it usable for the interface that controls the swarm of robots. Meanwhile, the user receives real-time information about the robots' positions and their environment.

Artemiadis said there is a direct correlation between what the user thinks about the swarm's behavior and how the swarm behaves. In other words, if a user thinks about changing the density or elevation of the swarm, the swarm does so upon receiving the information.
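To make that concrete, here is a minimal Python sketch of how a decoded command could change a swarm's density or elevation. It is an illustration only, not the lab's software: the state names, the `COMMANDS` table and the `apply_command` function are hypothetical, and each robot is reduced to an (x, y, z) point.

```python
from typing import Dict, List, Tuple

Position = Tuple[float, float, float]

# Hypothetical lookup table (an assumption for illustration):
# decoded brain state -> (horizontal spread factor, altitude change in meters)
COMMANDS: Dict[str, Tuple[float, float]] = {
    "spread": (1.5, 0.0),   # lower density: robots move away from the center
    "gather": (0.5, 0.0),   # higher density: robots move toward the center
    "climb": (1.0, 1.0),    # raise the whole swarm
    "descend": (1.0, -1.0), # lower the whole swarm
}


def apply_command(swarm: List[Position], state: str) -> List[Position]:
    """Return new target positions for the swarm given a decoded brain state."""
    if not swarm or state not in COMMANDS:
        return list(swarm)  # nothing to move, or unknown state: hold position

    scale, dz = COMMANDS[state]
    n = len(swarm)
    # Horizontal center of the swarm; density changes are made around it.
    cx = sum(p[0] for p in swarm) / n
    cy = sum(p[1] for p in swarm) / n

    return [
        (cx + scale * (x - cx), cy + scale * (y - cy), z + dz)
        for x, y, z in swarm
    ]
```

For example, `apply_command([(0, 0, 1), (2, 0, 1)], "gather")` pulls the two robots halfway toward their shared center while leaving their height unchanged.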

"We've seen trends go from single, large, expensive robots to multiple cheap ones," he said.

Artemiadis said he is optimistic about the technology's applications. Any task requiring multiple robots, such as search and rescue, delivery or security, could benefit from a system like this. A joystick can only control one robot at a time, he said, while the brain can control many more.

"We're building control interfaces," Artemiadis said. "It's like we're building the next keyboard or mouse."

That said, the system does have its limitations. Users need to be laser-focused on the task at hand, or else they lose control of the swarm.

The project has been underway since it received a Department of Defense grant two years ago. Since then, Artemiadis said, he and his researchers have gained a more robust understanding of which brain areas are used in an application like this.

"The main result was to understand if and how the brain cares about swarming behaviors," he said. "The most important discovery was the brain areas that can control a swarm — sensory, motor skills, visuals, planning of motions. Across all areas we see patterns that are related to swarm motion."

Moving forward, the team has started looking at controlling a swarm consisting of both aerial and ground vehicles.

The research team is a multidisciplinary group of mechanical and aerospace engineers at the doctoral, master's and undergraduate levels.

George Karavas, a Ph.D. student in mechanical engineering, wrote the algorithms that decode the brain information and transform it into usable directions for the robots.

"We make the subject think certain things," he said. "The algorithm picks it up, recognizes your brain state, and now there is a matter of matching commands to brain states. After that, you have another algorithm that controls the robots themselves."

He said some of the greatest challenges have come from variations in the brain: no two brains behave identically, and the same brain may behave differently in different circumstances.

"There are patterns we can detect, but the brains are different," he said. "We proceeded to create an algorithm that can work with any brain. We are not trained as people to control swarms. There is adaptation that needs to happen."

To accommodate this, the lab used machine learning, which essentially means the algorithm learns on its own to recognize patterns in brain activity.
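The lab's specific machine-learning method is not described here. As a rough illustration of the idea, the Python sketch below uses a nearest-centroid classifier, chosen only because it is short: it averages labeled example readings for each brain state, then assigns a new reading to the closest average. The feature vectors, labels and function names are invented for the example.

```python
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (feature vector, brain-state label)


def fit_centroids(samples: List[Sample]) -> Dict[str, List[float]]:
    """Average the example feature vectors recorded for each label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def classify(centroids: Dict[str, List[float]], features: Sequence[float]) -> str:
    """Return the label whose centroid is closest to the new reading."""
    def squared_distance(label: str) -> float:
        return sum((c - f) ** 2 for c, f in zip(centroids[label], features))

    return min(centroids, key=squared_distance)


# Made-up calibration data for one user: two features per reading.
calibration = [
    ([1.0, 0.0], "climb"),
    ([0.8, 0.2], "climb"),
    ([0.0, 1.0], "descend"),
    ([0.2, 0.8], "descend"),
]
centroids = fit_centroids(calibration)
print(classify(centroids, [0.7, 0.3]))
```

Because the averages come from one person's calibration readings, repeating the fitting step for each new user is one simple way such a system could adapt to different brains.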

Another key aspect of the project is the robots themselves and their construction.

Daniel Larsson, a master's student in aerospace engineering, handles this end.

"I take a signal from George's electroencephalogram [the system that reads brain signals] process, interpret it and tell the robots what to do," he said. "We send information via Bluetooth to the drones. We operate on near-infrared frequencies to give 3D positioning of the drones."

Larsson said the research has been especially interesting to him as one of its younger members.

"It's gotten me really involved in the research of others," he said. "And, it has helped me synthesize what I want to do with my own work. It's definitely multidisciplinary. We have everything from robotics, neuroscience, dynamics and signal processing."

Reach the reporter at Arren.Kimbel-Sannit@asu.edu or follow @akimbelsannit on Twitter.
