For years, movies and TV shows have depicted radical futures in which technology responds to gestures and commands. Now, that long-held vision is beginning to come to fruition in the form of artificial intelligence.

Given how precise math and science are, many people believe these are the only subjects needed to determine which ideas can have real-world applications. However, philosophy is how we arrive at those ideas in the first place.

Philosophy is useful in our conversations about AI not only because of the ethical dilemmas surrounding the technology, but also because it can logically establish which concepts can be translated into computer science and mathematical algorithms.

The new ways this technology is being implemented in society are a hot topic of conversation at ASU, where some professors say AI is reshaping the world. Meanwhile, students are inadvertently having philosophical conversations alongside scientific ones when they discuss how the ethical and legal frameworks for AI are lagging behind.

How do we conclude that it's possible to build artificial intelligence in the ways we imagine it? Can scientists define a behavior as a concept precisely enough to translate it into algorithms?

These questions began in philosophy, and today they are asked by philosophers and scientists tackling the frame problem: the difficulty of precisely predicting the effects of an action in the world, including everything that the action leaves unchanged.

Although the frame problem remains unsolved, mathematics has shown that some ideas can be translated into algorithms grounded in real, functional theorems. Still, examples like the frame problem show that determining which concepts can be translated into algorithms requires philosophical inquiry.

Jeffrey Watson, a lecturer in the School of Historical, Philosophical, and Religious Studies who conducts research in the philosophy of mind, said he believes some problems involving consciousness have found a place within philosophical discussion because they cannot be addressed anywhere else.

"I wouldn't want to do philosophy of mind without the input of science, but there's something else we need to do in addition to that: we need to understand the (logical) reasons," Watson said. "We need to understand the concepts."

Watson's research focuses on emergence, the view that consciousness emerges from certain things with unique characteristics, typically a brain. Emergentists view consciousness as a by-product of something else, but what causes emergence remains unknown.

He said that if the emergentist account of consciousness is correct, then the potential consequences of artificial intelligence change.

If philosophy changes our understanding of what consciousness is, other subjects are affected in turn.

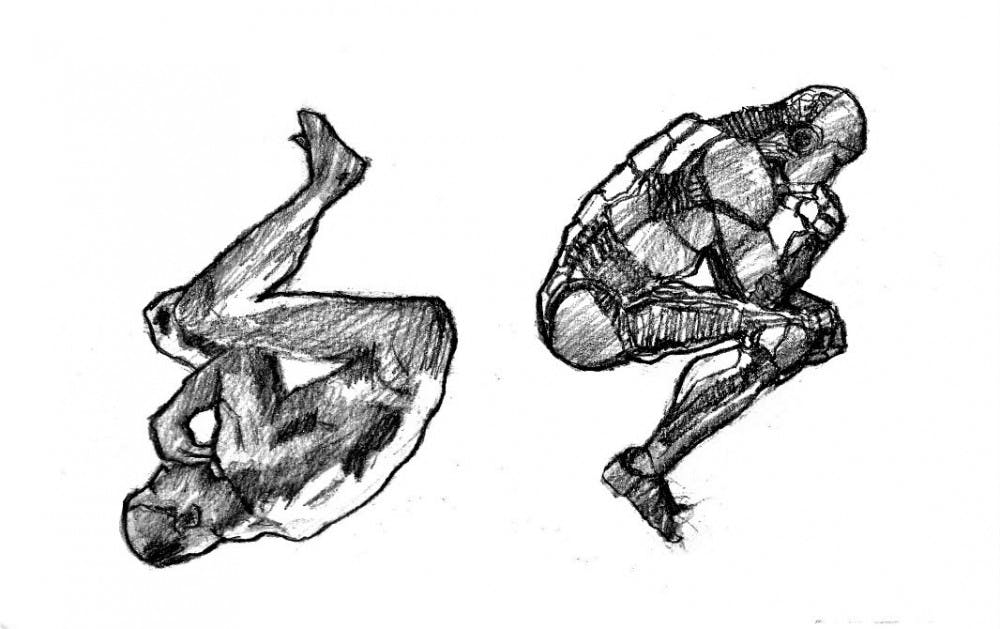

But with this understanding of what remains unknown about consciousness, more questions arise about the possibility of artificial intelligence gaining consciousness.

"If you accept that the brain is a case of emergence, then there could be another case (of emergence)," Watson said. "So you could have a conscious AI. It's possible, but it's not guaranteed."

In science, the predictability of results often does not matter very much. Instead, scientists are usually more committed to the results of the experiment itself.

But with artificial intelligence, some experiments could end up creating conscious robots.

Predictability, then, is very important, and logically demonstrating which predictions are likely is a practice philosophy has developed for a very long time, dating back at least as far as Aristotle.

Watson said those without philosophical training can quickly mix up concepts, adding, "Consciousness becomes responsiveness, and you can get pretty disastrous results from that."

Reach the columnist at msharm28@asu.edu and follow @MarkSharma96 on Twitter.

Editor's note: The opinions presented in this column are the author's and do not imply any endorsement from The State Press or its editors.

Want to join the conversation? Send an email to opiniondesk.statepress@gmail.com. Keep letters under 500 words and be sure to include your university affiliation. Anonymity will not be granted.
