When a video appearing to depict Joe Biden spewing anti-trans hate speech surfaced online in early February, Joshua Garland was alarmed. In the brief clip, which was widely shared on social media, Biden delivers transmisogynistic rhetoric so bigoted and obscene, it isn’t suitable to publish here — and the lip-synced audio sounds just like the president’s actual voice.

“Even my mom was like, what is this?” said Garland, an associate research professor at ASU’s Global Security Initiative. “I was like, Mom, it’s not real. It’s a deepfake.”

Deepfakes are synthetically generated digital media created using artificial intelligence. The technology is most often used to make doctored porn, but fake video and audio of politicians and public figures have also emerged in recent years. Deepfakes are one of several online disinformation techniques Garland researches at the GSI’s Center on Narrative, Disinformation and Strategic Influence.

“In the last couple of years, I’ve really seen disinformation as being one of the greatest existential threats to our democratic society, and really society in general,” Garland said. “And so I wanted to know what I could do as a mathematician or computer scientist to aid in this problem.”

The GSI is ASU’s global security research arm. Seventy percent of the initiative’s funding comes from the U.S. Department of Defense, the Department of Homeland Security and the intelligence community; another 26% comes from “other government” sources, according to GSI’s website. These agencies, which are primarily concerned with national and international security, commission research in AI, cybersecurity and border enforcement technologies.

Garland and the GSI aren’t the only misinformation researchers at ASU who receive defense funding. Hazel Kwon, an associate professor at the Cronkite School of Journalism and Mass Communication, has been researching digital media and society for more than a decade. Kwon’s Media, Information, Data and Society Lab has received support from the Department of Defense for research on the dark social web.

“When I began in the field of digital media, we were all talking about good things, about digital technologies for democracy,” Kwon said. “But after Web 2.0 sort of penetrated society, since then it has been exploited by people who have malicious intent. So now the whole paradigm in our field has really shifted toward talking about the dark side effects of technology.”

In January, Kwon was recruited by ASU’s McCain Institute, a political think tank, to be part of a task force on “Defeating Disinformation Attacks on U.S. Democracy.” The task force includes three other ASU faculty members, among them Scott Ruston, director of the GSI center where Garland works.

The disinformation task force is funded by the Knight Foundation and Microsoft. The McCain Institute does not receive Department of Defense funding. Instead, its long list of donors features household names from the technology, energy and finance sectors: Chevron, Walmart, AT&T, SpaceX, JPMorgan Chase, Raytheon and, perhaps most controversially, the Royal Embassy of Saudi Arabia, among others.

Why is the DOD so invested in studying and combating online disinformation? For that matter, why is Microsoft? Why has the field of misinformation studies exploded with government and corporate funding over the past few years? And what does that explosion mean for research agendas at public universities?

“It’s really hard to say if it’s good or bad,” Kwon said. “It’s there, and people are gonna use it, and we’re gonna study it anyway. One thing that’s clear is that the old model, the old theoretical framework — that open communication and this decentralized network for conversation, without having much control mechanisms, is ideal for public discussions — that paradigm seems to get very challenging.”

Anxiety

Before earning a Ph.D. in cinema and media studies from the University of Southern California, Ruston spent 10 years on active duty in the U.S. Navy. In 2017, he was hired by ASU’s GSI to study the rhetoric and narrative of Islamic extremist groups, such as Al Qaeda.

“This was also a time that there was growing attention on how nation-states were manipulating the information environments of other nation-states,” Ruston said. “You’ve probably heard lots of stories about Russian meddling in the 2016 election. So that was on people’s minds.”

The panic surrounding Russian interference in the 2016 presidential election via targeted online disinformation has frequently been dubbed “Russiagate” in popular media. Ruston maintains there is ample evidence the Internet Research Agency, an organization linked to the Russian government, ran fake accounts and astroturfing campaigns on American social media with the goal of “stoking political polarization.”

Journalists, media critics and data scientists have criticized liberal media narratives surrounding the Russiagate scandal for possibly exaggerating the scale of its impact. A January 2023 study from New York University found only 1% of Twitter users accounted for 70% of total exposure to the Internet Research Agency’s disinformation accounts; the study found “no evidence of a meaningful relationship between exposure to the Russian foreign influence campaign and changes in attitudes, polarization, or voting behavior.”

But even if the Russian influence campaign had no effect on voters’ decisions, the mere existence of the campaign prompted a wave of government attention to disinformation on social media. In January 2021, Ruston became the director of the GSI’s brand-new Center on Narrative, Disinformation and Strategic Influence, which seeks to “support efforts to safeguard the United States, its allies, and democratic principles against malign influence campaigns,” according to its website.

“To date, the most frequent funder has been the Defense Department,” Ruston said.

Garland joined the center in February 2022. His team is currently developing an algorithm for determining whether a given selection of text, like a news article or social media post, was written by a human or generated by an AI, such as the popular ChatGPT. Believable AI-generated text could pose the same disinformation issues deepfakes do, and at a faster rate and larger scale than human writers are currently capable of.

“Generated text is becoming so similar to human text that there’s going to be this blurry ground where we can no longer differentiate it,” Garland said. “So we have to have a tool that allows you to tell if this was generated by a human or generated by an artificial intelligence.”

The Defense Advanced Research Projects Agency, the DOD’s research arm, is funding the project. The ultimate goal is to publish a detection algorithm capable of identifying AI-generated text across a wide array of large language models, not just ChatGPT.

“Currently, ASU is number one in that metric,” Garland said. “For doing text detection, across this DARPA program, which is a very big DARPA program that includes many high-end universities and industry partners, we have the algorithm that’s doing the best currently. Which is pretty cool.”

Is AI-generated disinformation deployed by foreign actors already a large-scale threat? It’s difficult to know, said Garland, who couldn’t point to a specific example. There’s certainly already AI-generated content — and misinformation — on the internet. But Garland thinks that for DARPA, “the concern is in some ways very preventative,” anticipating hypothetical problems up to 20 years down the line.

The U.S. foreign intelligence apparatus is far from new, but in the social media era, its capabilities are evolving. The McCain Institute, for example, operates a web scraper that monitors sites like Facebook and Twitter for mentions of NATO.

In Kwon’s opinion, thinking about disinformation exclusively as a foreign political threat is a potentially problematic framing. In 2020, Americans experienced a more intimate side of the online information crisis in the form of COVID-19 conspiracy theories.

“In the past, when people talked about disinformation, it was really about foreign influencers, foreign intervention,” Kwon said. “Once you define disinformation actors as foreign institutions or operators, it’s really easy to sort of cut the boundary, right? This is the bad guy, and we are the victim. However, these days the domestic operation is so impactful.”

Motivation

Qian Li is a Ph.D. candidate in the Cronkite School who studies social media engagement and online social movements. A student of Kwon’s, Li spent the past two years researching the role of online media in influencing public opinion on the American gun control movement — particularly the role of non-traditional, grassroots sources.

“With the trend of neoliberalism in journalism also comes debate about the liberty of who can be a journalist,” Li said. “Due to the digitization and development of social media, everybody has the freedom to express their feelings or to report the news. That makes the public sphere much more digitized, and also complicated.”

Li and Kwon’s research focused on two oft-misunderstood media actors: activist media — in this case, gun control movement websites — and ephemeral media, a new term in the misinformation studies field.

Ephemeral media is low-quality, typically partisan media that is often uncredentialed and disappears over time. Imagine you click on a news article on Twitter, “and then you close it, and when you open it again, you find Error 404,” Li said. “You can’t see any information, just the link of the webpage. People will think it is because it’s fake news, or it is because of censorship. It gives people more imagination.”

There is evidence to suggest most misinformation on the internet is tied to ephemeral media and accessed via social media. Activist media is also typically disseminated through social media; users can have a hard time telling the difference between the two.

“Activists play an essential role in triggering public attention on social movements, but we should be careful,” Li said. “Their ideas, their agendas also will be affected by other information actors … We cannot ignore the interplay between activist websites with other kinds of media.”

The misinformation crisis the average American is most familiar with is probably vaccine-related conspiracy theories. Chun Shao, also a student of Kwon’s, is interested in how seemingly separate conspiracies build on each other through social networks.

“Even just from my personal social circle, there are some people who believe those types of conspiracy theories, discouraging them from getting a vaccine,” Shao said. “Or sometimes they don’t believe scientific findings. I think those types of misinformation are very very harmful.”

In 2022, Shao co-authored a study with Kwon which used AI topic-modeling techniques to identify connections between conspiracy theories about Bill Gates, COVID-19 and vaccine microchips. The caveat, of course, is that researching trending topics online “cannot measure whether people believe these conspiracies,” Shao said. “We only know whether they are talking about them.

“Sometimes, retweets may not mean endorsements,” he added with a chuckle.

That’s why differentiating between disinformation and misinformation is important. In an academic context, disinformation refers to false information presented with intent to deceive. Misinformation, on the other hand, is not always intentionally deceptive. Sometimes, inaccurate information just slips through the cracks. In the case of COVID-19, many so-called “misinformation actors” are normal people who legitimately want to spread their beliefs, blind — willingly or otherwise — to the factual inaccuracy of their positions.

“These domestic influencers and operators, their motivation is sometimes not extrinsic. It’s not for getting money,” Kwon said. “A big part of the disinformation industry is to make money, to make profit out of it. But another part of what we now define as disinformation mechanisms have a very intrinsic motivation. They really believe in it. In a way, their motivation is genuine.

“I think that’s where the real challenge comes in as a scholar,” she said. “To what extent should we consider it the free speech of people who have a genuine interest? To what extent should we consider it a part of the disinformation mechanism?”

Fracture

Garland knows a thing or two about the hazy and dangerous limits of free speech. Before coming to ASU, he researched online hate speech and citizen counterspeech efforts. In the process, he developed a somewhat cynical analysis of social media platform design.

“I think that the way the social media algorithms work, in some ways, promotes disinformation. In some ways, it promotes hateful rhetoric,” Garland said. “If they can show you something that’s going to be inflammatory, or highly engaged with, or mess with your emotions, or make you angry, or make you upset or whatever, they want you to see that first so that you keep scrolling.”

The problem, in Garland’s opinion, is a lack of incentives for platforms to change their algorithms. Most just remove posts and suspend or ban users who don’t follow their guidelines, rather than qualitatively adjust their engagement strategies.

“The big tech solution up until now has been to hide it, delete it, censor it, these kinds of things … and that just seems to make the problem a lot worse,” Garland said. “There’s been a lot of research that shows if you censor people, you ban them, you remove them from platforms, they simply move to other platforms where the problem’s way worse.”

The first users to migrate platforms are typically political extremists: neo-Nazis, antisemites and racists of all flavors. These users congregate on low-moderation social forums like 4chan and 8chan, the home of the QAnon conspiracy and the manifestos of several white supremacist mass shooters.

“When they come back from those cesspools,” Garland said, “they are just completely radicalized.”

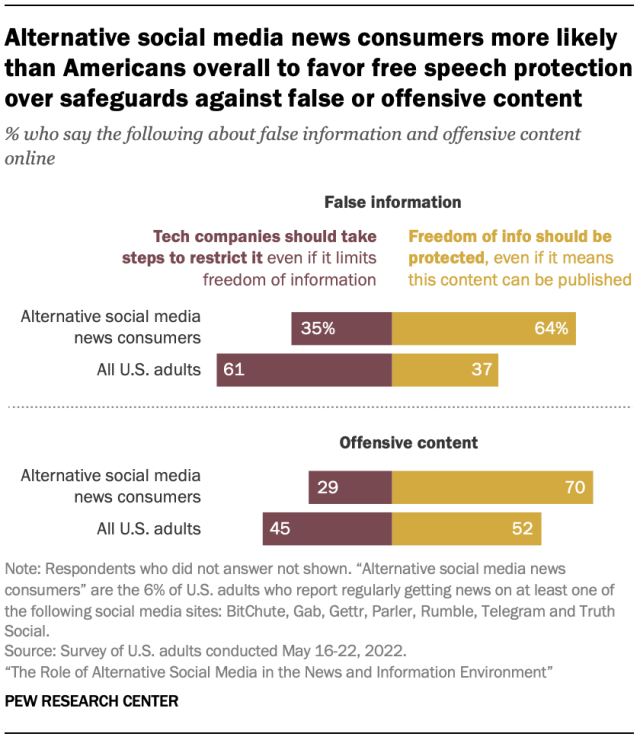

Since 2020, alternative social media sites like Parler, Gab and Truth Social have sprung from right-wing disdain for what some view as censorship on mainstream platforms, namely Twitter and Facebook. These alternative platforms amplify right-wing influencers while targeting audiences that relate politically to those who’ve been suspended. According to a 2022 report from the Pew Research Center, around 15% of prominent accounts on alternative sites had previously been banned from a different platform, often for spreading misinformation.

The majority of people on alternative platforms — spaces where there is far less exposure to traditional news media than on other platforms — say they are there to stay informed on current events, according to Pew. Prominent accounts on these platforms often peddle COVID-19 vaccine misinformation and transphobic rhetoric, and users are largely satisfied with the experience.

When anyone, from political extremists to everyday conspiracy theorists, can spread misinformation online, the outcome can be deadly. In the case of COVID-19, some anecdotal reports have suggested anti-vaccine rhetoric contributed to hundreds of thousands of preventable deaths. But when misinformation actors are pushed off social platforms, they often end up in information islands where their distrust of institutional knowledge and other biases are affirmed.

For these users, “censorship” is a badge of honor. Why would they trust public academics, much less the federal government, to study and police misinformation?

Intertwined

ASU’s McCain Institute is exactly the kind of organization people with anti-establishment beliefs are predisposed to distrust. When the institute announced its disinformation task force on Twitter, its post was flooded with replies characterizing the initiative as an authoritarian attack on free speech.

The McCain Institute isn’t a DARPA project or a research lab; it’s a nonprofit political think tank. Its funders aren’t U.S. government agencies; they’re mainly corporations and private entities.

Microsoft, one of the McCain disinformation task force’s funders, has recently invested heavily in disinformation analysts and removed Russian state-affiliated media from its app store. Many tech companies, including some McCain Institute donors, have poured resources into disinformation research as their credibility and favorability have floundered since the 2016 Russiagate scandal.

Walmart, one of the McCain Institute’s largest donors, has run ads on over two dozen sites with COVID-19 misinformation. The corporation has also advertised on RT, a Russian state-affiliated news site, effectively financing the exact disinformation apparatus the McCain Institute has criticized on multiple occasions.

In 2014, the McCain Institute received $1 million from the Saudi government, a state notorious for stifling press freedoms and running its own social media disinformation campaigns in the style of Russiagate. And that’s not the institute’s only global scandal.

In 2019, the State Press broke the news that Kurt Volker, then the McCain Institute’s executive director, resigned from his position following reports that he assisted Donald Trump in pressuring the Ukrainian government to dig up dirt on Joe Biden’s son, Hunter Biden. Less than two weeks later, Trump’s first impeachment inquiry was already underway.

There’s reason enough to be skeptical of the McCain Institute. And if the free speech Twitter trolls looked a little closer, they might realize it’s for a different reason than the one they assume: Across the board, tech companies, universities and intelligence agencies are financially and logistically entangled with the same disinformation actors they publicly disavow.

“I’m of the school of thought that disinformation is a very, very old problem,” Garland said. “It was called propaganda for a long time, right? I think that the most basic term is lying. And lying has been around since forever.”

In parallel with the surge in government and private funding of national security-oriented disinformation research, another side of the field has emerged, sometimes called critical disinformation studies.

Critical scholars have called attention to the U.S. government’s historical manipulation of media narratives. George W. Bush, for example, infamously lied about Saddam Hussein having weapons of mass destruction to justify the 2003 invasion of Iraq. Others have suggested the trend of disinformation alarmism in legacy media amounts to a moral panic.

Journalist Joseph Bernstein coined the term ‘Big Disinfo’ to describe “an unofficial partnership between Big Tech, corporate media, elite universities, and cash-rich foundations” aimed at maintaining their legitimacy in an era of growing distrust in institutions.

Kwon and Ruston are united in their belief that public institutions can and should serve as guardians of the information ecosystem, guiding citizens toward factual information and away from fake news.

Garland, on the other hand, sees the misinformation crisis as a collective problem with a collective solution. Everyone will have to actively build their communities’ resilience through education, counterspeech and cooperation.

“I think that as time goes on, the disinformation engine is going to get worse and worse, and technological solutions aren’t going to work,” Garland said. “I think it’s a socio-technological problem, and I think we need technological innovations, but we also need people in the society to come out and help fight against disinformation through media literacy, through counterspeech, these kinds of initiatives.”

Edited by Camila Pedrosa, Sam Ellefson and Greta Forslund.

This story is part of The Automation Issue, which was released on March 15, 2023.

Reach the reporter at ammoulto@asu.edu and follow @lexmoul on Twitter.
