As artificial intelligence spreads to new fields, not all of them are quite ready to embrace it. They need more time for security measures to be implemented, comprehensive ethical guidelines to be developed and regulatory frameworks to be put in place.

On Feb. 1, following its partnership with OpenAI, ASU began accepting proposals for potential applications of the program. One such proposal, called BehavioralSim and showcased in the university's Innovation Challenge update, is intended for the College of Health Solutions.

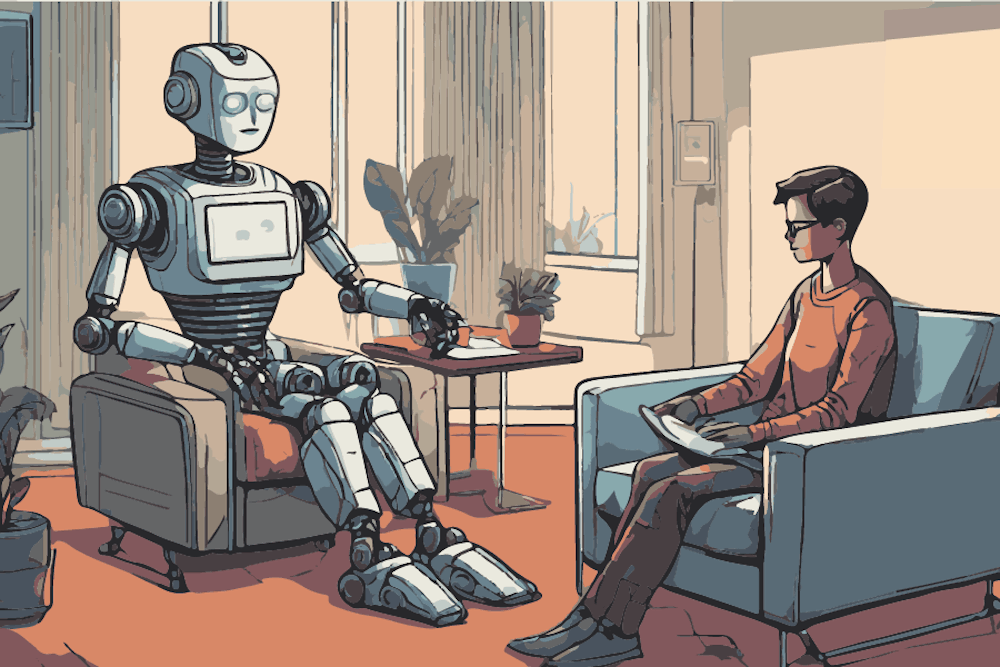

The initiative aims to use AI to simulate counseling scenarios in which students can practice their skills, including person-centered techniques. This raises serious questions about replacing human teachers and their professional experience with AI.

Anyone using this tool or similar AI bots should acknowledge the inherent limitations of such technology.

"They have a lot of flaws," ASU President Michael Crow said in a meeting with The State Press. "I've asked them really complicated social questions or cultural questions that I had one of them lying to me, you know, along the way."

Despite its ability to offer guidance and information, an AI bot cannot fully replace the expertise and nuanced understanding provided by human counselors. In times of heightened stress or when faced with psychologically intense questions, the efficacy of AI may be questionable.

Andy Woochan Kwon is a licensed associate counselor and a graduate of the Master of Counseling program at ASU.

"A lot of your prognosis in counseling as a patient is dependent on the relationship that you formed with your therapist," Kwon said. "I believe the research shows us about (25)% of that prognosis can be attributed to the relationship that you have with the therapist."

The complexities of human emotions and experiences often require the empathetic support and personalized approach that only a trained counselor can offer. Furthermore, the dynamic nature of counseling necessitates the ability to adapt and respond in real time, a capability that may be lacking in AI-driven interactions.

"AI is a great tool, but it's not yet a replacement for how complex humans can be," Kwon said.

Additionally, it's essential to recognize that AI, like humans, possesses biases and limitations.

These biases may inadvertently influence the responses AI bots provide, potentially affecting the quality and accuracy of the information offered. In situations requiring sensitivity and nuanced understanding, relying solely on AI may fall short of meeting the diverse needs of individuals seeking mental health guidance.

Until the risk of bias and false information is proven to be minimal and mechanisms are in place to verify the diagnoses that AI replicates, it should not be used in the mental health field.

Protecting patient privacy and ensuring data security are critical considerations in the integration of AI into counseling. However, an ethical dilemma arises when attempting to utilize patient data to enhance AI models without compromising confidentiality.

"The ethical code about confidentiality is there to ensure that clients feel safe in the therapy setting," Kwon said.

Currently, ASU's proposal guidelines state that ChatGPT Enterprise, the AI engine behind these projects, is not approved for FERPA-protected or other sensitive data.

This creates a paradox: To improve, BehavioralSim must access data it is not currently secure enough to handle. Crow was asked in a meeting with The State Press how the success of OpenAI partnership programs, such as BehavioralSim, will be evaluated.

"If it doesn't solve the problem better, enhance the outcome better, help the student better, we're not going to do it," Crow said in the meeting. "So if it doesn't have the ability to be of any value at all, then it's not going to be doing so."

While this approach might be suitable for other fields, the consequences of mishandling mental health are too serious to risk. Is an AI experiment worth gambling with the mental health of students?

Navigating the complex terrain between data-driven insights and high ethical standards is essential for fostering trust and confidence in the use of AI technology in mental health and beyond. AI has not proven that it can meet the standards required for mental health care, so it should be prohibited in the field until it improves.

Edited by River Graziano, Alysa Horton and Grace Copperthite.

Reach the columnist at dmanatou@asu.edu.

Editor's note: The opinions presented in this column are the author's and do not imply any endorsement from The State Press or its editors.

Dimitra is a junior studying biomedical engineering and physics. This is her second semester with The State Press. She has also worked as a research assistant in Kirian Lab.